Crafting Smarter LLM Prompts: A Brief Guide to Prompt Engineering

In the rapidly evolving landscape of AI, we have seen massive growth in Large Language Models (LLMs). In fact, just two weeks ago, OpenAI released GPT-4o to the public, the newest model behind their extremely popular chatbot ChatGPT. It is clearer than ever that being able to leverage the full potential of LLMs is a crucial skill in the AI world.

One thing that influences how effective an LLM can be is how well your prompts are designed. That's where prompt engineering comes in! Good prompts can make your AI responses clearer, more accurate, and even more creative. In this post, we'll share simple tips and tricks to help you master the art of prompt engineering and get the most out of your chatbot.

K-Shot Prompting

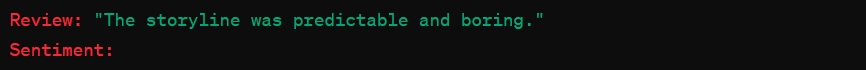

K-shot prompting refers to providing k explicit examples of the task you would like the LLM to perform inside the prompt itself. Suppose your task is to classify a movie review as positive or negative. You can always choose to provide no examples at all (k=0, also known as 0-shot prompting) and just ask the LLM directly:

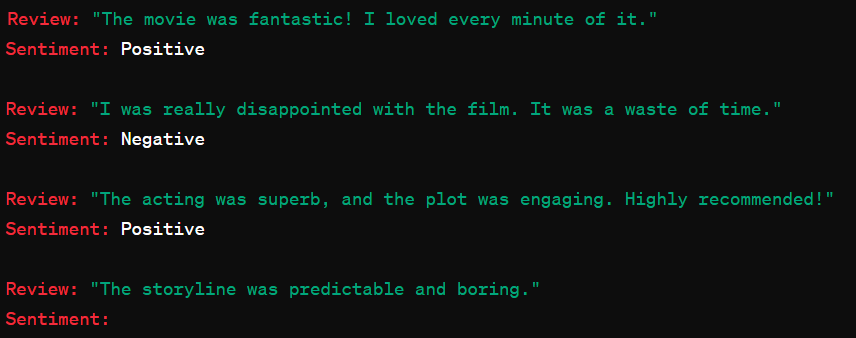

However, if the LLM has not been given any context or sentiment training beforehand, there is some chance that it will incorrectly classify this review as positive. We can augment the prompt with k=3 examples to employ 3-shot prompting:

Now we are providing the LLM with context, even if it did not have any beforehand, and it is much more likely to output the correct sentiment. Studies have shown that few-shot prompting often improves results over 0-shot prompting, so be sure to give this a shot!
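To make this concrete, here is a minimal sketch of how a 3-shot prompt could be assembled in Python. The function name `build_k_shot_prompt`, the instruction wording, and the sample reviews are all made up for illustration; the resulting string can be sent to whichever chatbot or API you use.

```python
def build_k_shot_prompt(examples, query,
                        task="Classify each movie review as Positive or Negative."):
    """Assemble a k-shot prompt from (review, label) pairs plus one new review."""
    lines = [task, ""]
    for review, label in examples:
        lines.append(f"Review: {review}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The final review is left unlabeled so the LLM completes the pattern.
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


# Hypothetical labeled examples (k = 3).
examples = [
    ("An absolute joy from start to finish.", "Positive"),
    ("I walked out halfway through.", "Negative"),
    ("The plot dragged and the acting was wooden.", "Negative"),
]

prompt = build_k_shot_prompt(examples, "I would not watch this again.")
print(prompt)
```

Passing an empty list of examples gives you the 0-shot version of the same prompt, which makes it easy to compare the two side by side.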

Chain-of-Thought Prompting

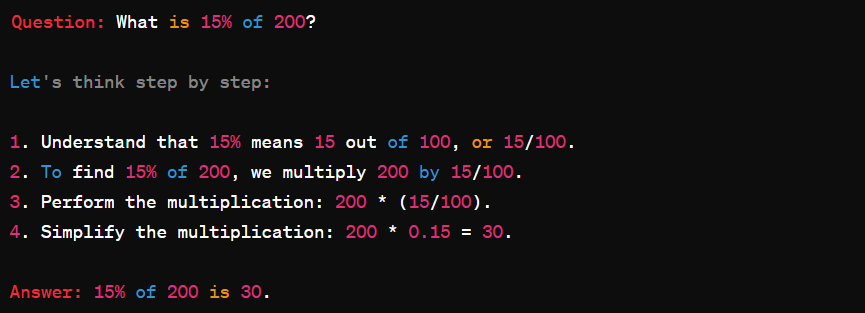

Chain-of-Thought prompting refers to asking the LLM to emit intermediate reasoning steps as part of its response. This is usually done through k-shot prompting, by providing an example of how the LLM can emit these steps. Take, for example, a simple math problem asking for a percentage calculation. We ask the question, explain the steps, and state the answer, all inside a single prompt:

By providing these steps in the prompt, we give the LLM a worked pattern it can follow to solve more complex math problems involving percentages. Admittedly, this is a very simple example for demonstration purposes, but Chain-of-Thought prompting can prove very useful in more complicated scenarios. It can also be used as a means of debugging: if the LLM is clearly giving the wrong answer to a prompt, you can go through the reasoning steps it took to see where it went wrong.
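As a rough illustration, a Chain-of-Thought prompt with one worked example could be put together like this. The percentage problem, the "Step N" layout, and the helper name `build_cot_prompt` are our own assumptions for the sketch, not a required format.

```python
# A hypothetical worked example: the question, the reasoning steps, and the answer.
worked_example = {
    "question": "What is 15% of 200?",
    "steps": [
        "Convert 15% to a decimal: 15 / 100 = 0.15.",
        "Multiply the decimal by the number: 0.15 * 200 = 30.",
    ],
    "answer": "30",
}


def build_cot_prompt(example, new_question):
    """Show one fully reasoned example, then pose a new question in the same format."""
    lines = [f"Q: {example['question']}",
             "A: Let's work through this step by step."]
    for i, step in enumerate(example["steps"], start=1):
        lines.append(f"Step {i}: {step}")
    lines.append(f"Answer: {example['answer']}")
    lines.append("")
    # End on the same opening phrase so the LLM continues with its own steps.
    lines.append(f"Q: {new_question}")
    lines.append("A: Let's work through this step by step.")
    return "\n".join(lines)


cot_prompt = build_cot_prompt(worked_example, "What is 35% of 80?")
print(cot_prompt)
```

Because the response should come back as numbered steps, a wrong final answer can be traced to the specific step where the reasoning went off track.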

Step-Back Prompting

Step-Back prompting refers to asking the LLM to take a "step back" and identify the high-level concepts that might be necessary to perform the intended task. This style of prompting can be seen as a specific type of Chain-of-Thought prompting, where the intermediate reasoning step is to look at the problem holistically. Consider the following chemistry problem:

By asking the LLM to take a "step back" and identify the relevant overarching principles, we encourage it to back up its answer with established facts. When its response is grounded in the correct principles and formulas, the LLM tends to be more accurate than when it attempts the problem from the get-go with no context and risks outputting an incorrect answer.
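One way to sketch this in Python is as two calls: first ask only for the relevant principles, then ask the original question with those principles included. This two-call split is just one possible arrangement (the same request can also be made in a single prompt). The `ask_llm` parameter is a placeholder for whatever function sends a prompt to your model, and the gas-law question and `fake_llm` stand-in are hypothetical.

```python
def step_back_prompt(question):
    """First prompt: ask for the underlying principles, not the solution."""
    return (
        "Before answering, take a step back.\n"
        "What general principles or formulas are relevant to the question below?\n"
        "List them without solving the problem.\n\n"
        f"Question: {question}"
    )


def grounded_prompt(question, principles):
    """Second prompt: ask the original question, grounded in those principles."""
    return (
        f"Relevant principles:\n{principles}\n\n"
        "Using these principles, solve the problem and show your work.\n\n"
        f"Question: {question}"
    )


def answer_with_step_back(question, ask_llm):
    # ask_llm is any callable that takes a prompt string and returns the reply.
    principles = ask_llm(step_back_prompt(question))
    return ask_llm(grounded_prompt(question, principles))


def fake_llm(prompt):
    # Stand-in for a real model call so the sketch runs offline.
    if prompt.startswith("Before answering"):
        return "Ideal gas law: PV = nRT"
    return "(the model's final answer would appear here)"


question = ("What happens to the pressure of an ideal gas if the temperature "
            "doubles and the volume is halved?")
print(answer_with_step_back(question, fake_llm))
```

Swapping `fake_llm` for a real client call is the only change needed to try this against an actual model.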

There is a common theme among these prompt engineering techniques: providing the LLM, within the prompt itself, with the context it needs to solve the problem. While a given LLM might already have this context from its pretraining data, in some cases it might not, which can lead to undesirable results. That's why prompt engineering is important; by ensuring that the LLM has this context within the prompt, we increase accuracy and efficiency.

These are just a few of the many different strategies that exist for prompt engineering. We hope that these techniques help in crafting smarter prompts and receiving better answers. Happy Prompting!