Lightning-speed AI code generation with Diffusion LLM

The speed at which LLMs generate output is starting to become a bottleneck in scaling up usage. It is not unusual to wait for minutes, or even tens of minutes, for your AI code assistant to spit out some code.

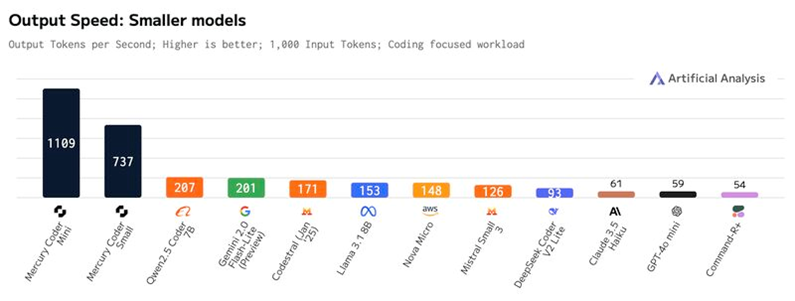

Recently, I played around with Mercury Coder Mini, a Diffusion LLM (as opposed to an autoregressive LLM like ChatGPT) that is specifically optimized for code generation. The speed with which it generates code is exceptional, and it could become a game changer. It's reported to achieve excellent performance across various coding benchmarks, often surpassing other models like GPT-4o Mini and Claude 3.5 Haiku while generating up to 10x more tokens per second.

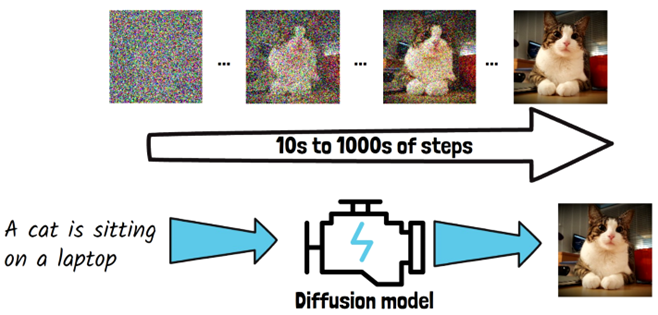

Diffusion models power AI systems like OpenAI Sora and are used primarily to create images and videos. A Diffusion LLM applies the diffusion approach to text: it generates a rough version of the entire response all at once and then iteratively refines it, similar in concept to diffusion text-to-image models.

Understanding Sequential Token Generation

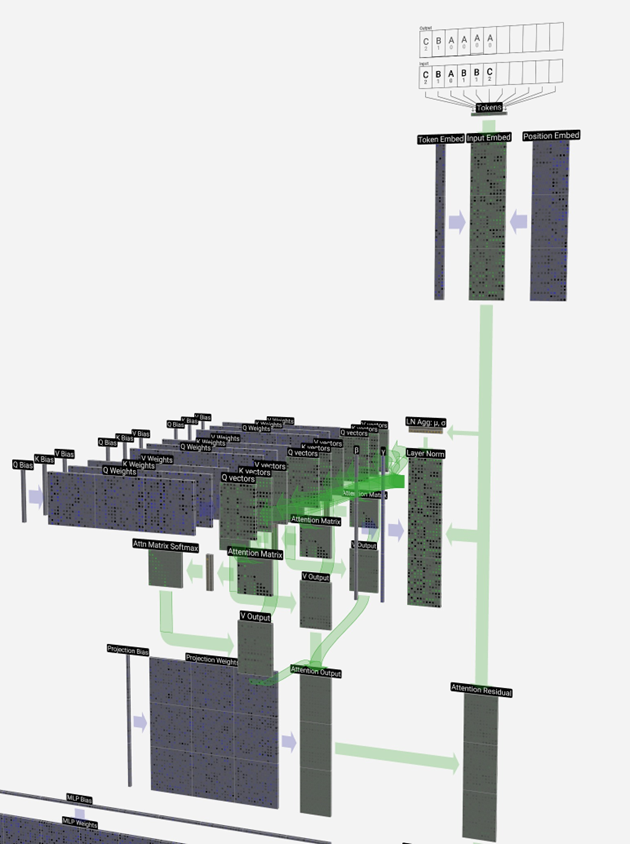

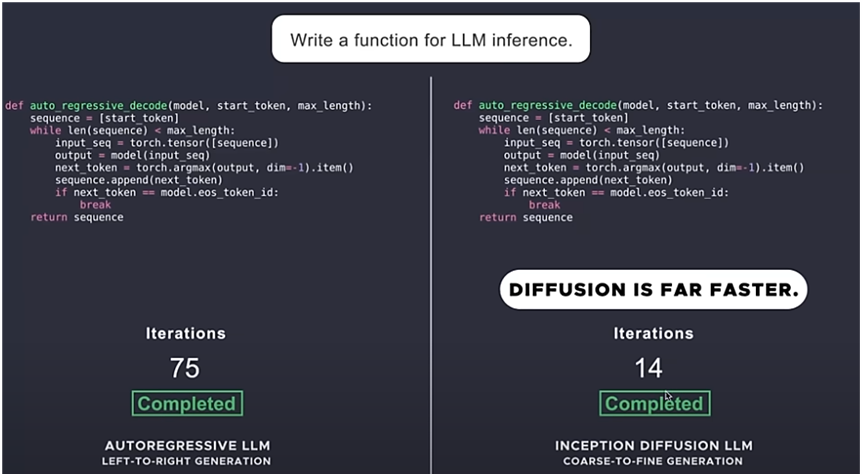

ChatGPT, built upon the GPT (Generative Pre-trained Transformer) architecture, is an autoregressive LLM. Autoregressive LLMs generate content from left-to-right. The text is generated by predicting one token at a time, conditioned on all previously generated tokens. This process mirrors human language production, where each word depends on the preceding context.
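To make the one-token-at-a-time loop concrete, here is a minimal Python sketch. The `predict_next` function and its `TOY_MODEL` lookup table are hypothetical stand-ins for a real model, which would score every vocabulary token against the full prefix; only the shape of the loop is the point.

```python
# Hypothetical stand-in for a trained model: maps the latest token to the next.
# A real autoregressive LLM conditions on the entire prefix, not just the last token.
TOY_MODEL = {
    "<start>": "def",
    "def": "add(a,",
    "add(a,": "b):",
    "b):": "return",
    "return": "a+b",
    "a+b": "<end>",
}


def predict_next(prefix):
    """Return the next token given everything generated so far."""
    return TOY_MODEL.get(prefix[-1], "<end>")


def generate_autoregressive(max_tokens=20):
    """Generate left to right, with one model call per new token."""
    tokens = ["<start>"]
    model_calls = 0
    while len(tokens) - 1 < max_tokens:
        next_token = predict_next(tokens)  # depends on all previous tokens
        model_calls += 1
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens[1:], model_calls


tokens, model_calls = generate_autoregressive()
print(" ".join(tokens))
print("model calls:", model_calls)
```

The number of model calls grows with the length of the output, which is why long responses take a long time to produce.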

During this process, LLM inference involves two phases:

- Prefill (init) phase

- Decode (generate) phase

Alex Razvant wrote a very good article describing LLM inferencing, which I am summarizing here.

LLM Prefill (Init) Phase

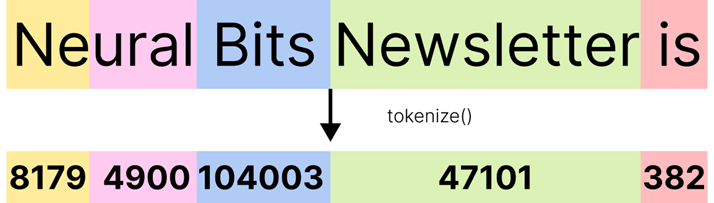

Prefill is the phase in which the LLM processes the input. The LLM converts the prompt into a series of input tokens. A token is a unit of text that represents a word or a portion of a word. For English, a token is roughly 0.75 words, or about 4 characters.
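As a quick illustration of that rule of thumb, the sketch below estimates token counts from word or character counts. The function names are made up for this example, and the result is only an approximation; a real tokenizer will give different numbers depending on the text.

```python
WORDS_PER_TOKEN = 0.75  # rule of thumb for English text
CHARS_PER_TOKEN = 4     # rule of thumb for English text


def estimate_tokens_from_words(word_count):
    """Approximate token count from a word count."""
    return round(word_count / WORDS_PER_TOKEN)


def estimate_tokens_from_chars(char_count):
    """Approximate token count from a character count."""
    return round(char_count / CHARS_PER_TOKEN)


prompt = "Write a Python function that adds two numbers"
print(estimate_tokens_from_words(len(prompt.split())))
print(estimate_tokens_from_chars(len(prompt)))
```

The two estimates will usually differ slightly, which is expected for a heuristic like this.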

The LLM takes tokens as input and computes intermediate states (keys and values). These are then used to generate the "first" new token. During prefill, the text is tokenized (see example in figure above).

LLM Decode (Generate) Phase

Once generated, each token is turned into a vector embedding, a numerical representation that the model can understand and make inferences from. These embeddings are then processed by the LLM in order to generate an appropriate output for the user. The output is produced as completion tokens (aka output tokens), generated one at a time until a stopping criterion is reached, such as the token limit or one of a list of stop words.
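The stopping criteria can be sketched in a few lines of Python. This is an illustrative example only: `collect_until_stop` is a hypothetical helper, and the token stream is a plain list standing in for the tokens a model would emit one at a time.

```python
def collect_until_stop(token_stream, max_tokens, stop_words):
    """Collect output tokens until a stop word or the token limit is hit."""
    output = []
    for token in token_stream:
        if token in stop_words:
            return output, "stop_word"
        output.append(token)
        if len(output) >= max_tokens:
            return output, "token_limit"
    return output, "stream_ended"


# A plain list stands in for tokens arriving from the model.
stream = ["print", "(", "'hi'", ")", "<END>", "ignored"]
print(collect_until_stop(stream, max_tokens=10, stop_words={"<END>"}))
print(collect_until_stop(stream, max_tokens=2, stop_words={"<END>"}))
```

The first call ends on the stop word, while the second ends early because the token limit is reached first.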

Each new output token depends on the stored states (keys and values) of all previous tokens. This makes the generate phase memory-bound: every step has to read those states back from memory, yet it produces only a single token.
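One way to picture the two phases is a toy key/value cache. In the sketch below, `toy_kv` is a hypothetical placeholder for the real key and value tensors, and the generated tokens are supplied up front so that only the cache traffic is counted. It is a simplified picture, not how any particular inference engine is implemented.

```python
def toy_kv(token):
    """Hypothetical stand-in for the key/value states of one token."""
    return ("key:" + token, "value:" + token)


def prefill(prompt_tokens):
    """Process the whole prompt in one pass and cache its keys/values."""
    return [toy_kv(token) for token in prompt_tokens]


def decode(kv_cache, generated_tokens):
    """Add tokens one by one; each step reads every cached entry."""
    cache_reads = 0
    for token in generated_tokens:
        cache_reads += len(kv_cache)    # attend over all earlier tokens
        kv_cache.append(toy_kv(token))  # then store the new token's states
    return cache_reads


cache = prefill(["write", "a", "function"])
reads = decode(cache, ["def", "f", "(", ")"])
print("cache entries:", len(cache))
print("cache reads during decode:", reads)
```

Prefill fills the cache in a single pass, while decode touches the growing cache again on every step, so the amount of data read keeps increasing as the response gets longer.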

Diffusion LLM, created by Inception

Inception (or Inception Labs?) was founded by professors from Stanford, UCLA, and Cornell, pioneers in diffusion modeling and cornerstone AI technologies, including Flash Attention, Decision Transformers, and Direct Preference Optimization. There's not a lot of information on the company, but I'm guessing it was founded in 2024.

In a TechCrunch article last month, their co-founder was quoted as saying that Inception has already secured several customers, including unnamed Fortune 100 companies, by addressing their critical need for reduced AI latency and increased speed. "What we found is that our models can leverage the GPUs much more efficiently," Stefano Ermon said. Inception offers an API as well as on-premises and edge device deployment options, support for model fine-tuning, and a suite of out-of-the-box DLMs for various use cases. The company claims its DLMs can run up to 10x faster than traditional LLMs while costing 10x less.

What is Mercury Coder Mini?

Mercury Coder Mini has shown impressive performance, tying for 2nd place in benchmarks and outperforming speed-optimized models like GPT-4o Mini and Gemini-1.5-Flash. It is also reported to be about 4x faster than GPT-4o Mini. It can generate text at speeds of 1,000+ tokens per second, making it up to 10x faster than other AI models.
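To put those throughput numbers in perspective, here is a small back-of-the-envelope calculation. The 2,000-token response length is an assumption picked for illustration, and the baseline speed is simply the figure implied by "up to 10x faster", not a measured value.

```python
def generation_seconds(num_tokens, tokens_per_second):
    """Time to stream a response at a steady token rate."""
    return num_tokens / tokens_per_second


RESPONSE_TOKENS = 2000            # assumed response length
MERCURY_TPS = 1000                # from the "1,000+ tokens per second" claim
BASELINE_TPS = MERCURY_TPS / 10   # implied by "up to 10x faster"

print(generation_seconds(RESPONSE_TOKENS, MERCURY_TPS))   # 2.0
print(generation_seconds(RESPONSE_TOKENS, BASELINE_TPS))  # 20.0
```

Under these assumptions, the same response takes about 2 seconds instead of about 20.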

Overview of Diffusion Text-to-Image Models

Diffusion models power AI systems like OpenAI Sora and are mainly used to create images, video, and audio. A diffusion model receives a prompt as input and starts with a completely noisy, randomly sampled image. Rather than generating one pixel at a time, it gradually refines the whole image: the model has learned to remove noise, and in each step it removes some of it. The noise removal is conditioned on the input prompt, so the process ends with a clear image that matches the prompt. This iterative process comes with a latency drawback.
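The refinement loop can be illustrated with a toy Python sketch. This is a heavy simplification: a real diffusion model uses a trained network to predict the noise to remove, whereas here the "image" is a short list of pixel values and the clean `target` (standing in for the image that matches the prompt) is assumed to be known in advance.

```python
import random


def denoise(target, steps=10, seed=0):
    """Start from random noise and refine all pixels together, step by step."""
    rng = random.Random(seed)
    image = [rng.uniform(0.0, 1.0) for _ in target]  # pure noise
    errors = []
    for step in range(steps):
        remaining = steps - step
        # Remove a share of the remaining noise from every pixel at once.
        image = [x + (t - x) / remaining for x, t in zip(image, target)]
        errors.append(max(abs(x - t) for x, t in zip(image, target)))
    return image, errors


target = [0.0, 0.5, 1.0, 0.25]  # hypothetical "clean" image for the prompt
final_image, errors = denoise(target)
print([round(e, 3) for e in errors])
```

The printed errors shrink with every step, showing how the whole image converges together instead of being drawn pixel by pixel.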

How Diffusion LLM Works

A Diffusion LLM applies the diffusion approach to text. Instead of producing one token at a time, it generates a rough version of the entire response all at once and then iteratively refines it, similar to diffusion text-to-image models.
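The sketch below is a toy illustration of that idea, not Mercury's actual algorithm, which is not described here. It assumes the rough draft is a fully masked sequence and that each refinement pass resolves several positions in parallel. The `target_tokens` list plays the role of the model's predictions, and the random fill order stands in for whatever positions a real model would be most confident about.

```python
import random

MASK = "<mask>"


def refine_in_parallel(target_tokens, tokens_per_step=3, seed=0):
    """Fill in a fully masked draft, several positions per refinement pass."""
    order = list(range(len(target_tokens)))
    random.Random(seed).shuffle(order)  # positions are not filled left to right
    draft = [MASK] * len(target_tokens)
    history = [list(draft)]
    while order:
        batch, order = order[:tokens_per_step], order[tokens_per_step:]
        for position in batch:
            draft[position] = target_tokens[position]
        history.append(list(draft))
    return history


target = "def add ( a , b ) :".split()
history = refine_in_parallel(target)
for draft in history:
    print(" ".join(draft))
print("refinement passes:", len(history) - 1)
```

With 8 tokens and 3 positions resolved per pass, the draft is complete after 3 passes, whereas a token-by-token loop would need one step per token.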

My Results

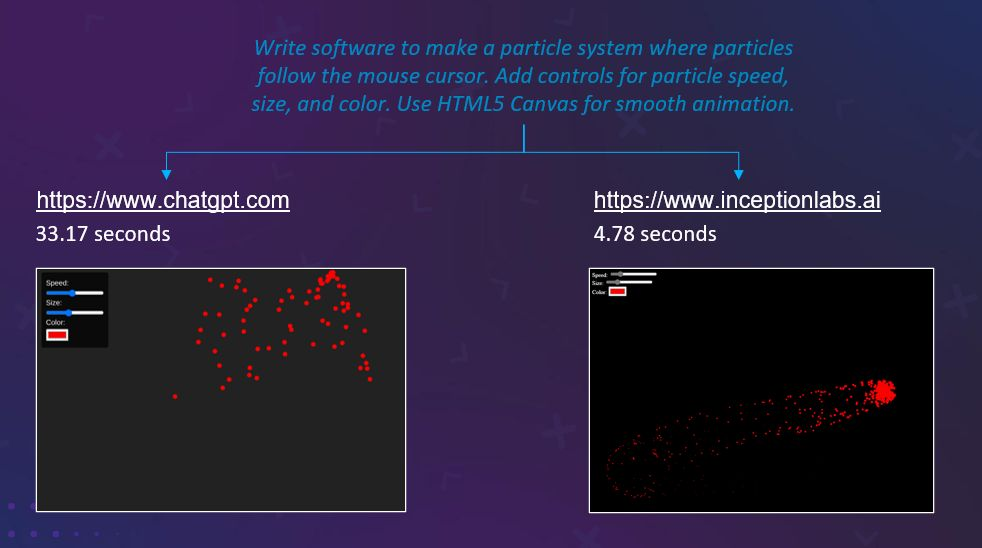

If you take the prompt shown in the image above and run it through both ChatGPT and Inception's Mercury Coder Mini, the speed advantage of the diffusion LLM is undeniable.

The quality of this simple code generation was also great, surpassing the quality provided by ChatGPT. Mercury Coder Mini was optimized for code generation after all.

But as I progressed to medium-complexity code, I encountered non-working solutions, and the model was unable to fix the issues I ran into.

We'll have to keep an eye on how this dLLM continues to evolve, but it sure is interesting.